Small Models, Big Classrooms, Massive Gains

…but first here’s what’s popping in AI

From Big Tech’s billion-dollar bets to free Swahili models, the AI news cycle is heating up. Here’s what matters:

Big Tech is shaping the agenda: Teacher tools, higher-ed pilots, and smaller edge-ready models are where the majors are betting. Google just launched an AI for Education Accelerator with a $1B higher-ed commitment, and Microsoft dropped its Back-to-School EDU updates plus the 2025 AI in Education Report.

Trust is still fragile: Karnataka’s AI attendance pilot is facing privacy concerns, a reminder that governance and consent are non-negotiable for sustainable adoption.

Teacher capacity is the frontier: OpenAI Academy in India plans to train one million teachers in GenAI, showing what large-scale PD could look like in the Global South.

Local languages are breaking through: Swahili Gemma models were launched, a signal that equitable AI is moving beyond English-first contexts.

Major glow up: Automatic speech recognition gets a huge upgrade. NVIDIA’s latest open-source ASR model supports 25 languages and can transcribe 3 hours of audio in one go.

Safety is going cross-lab: In a rare move, OpenAI and Anthropic ran joint safety tests on each other’s models, signalling the start of shared benchmarks and multi-actor guardrails.

Voice systems level up: OpenAI’s new GPT-Realtime delivers low-latency speech interactions and streaming APIs. This brings AI tutors closer to real-time dialogue and coaching, while raising questions about evidence, access, and guardrails.

Africa builds AI capacity: Strive Masiyiwa is advancing Africa’s first “AI factory” with NVIDIA, deploying 3,000 GPUs in South Africa and planning rollout across Egypt, Kenya, Nigeria, and Morocco. This marks a breakthrough for local compute capacity and digital autonomy.

Living on the Edge: Bringing AI to offline schools

Today’s flex: Edge AI, aka how to bring powerful models into classrooms without needing 3G.

What’s the hype? Imagine scoring oral reading ability in real time from a short audio clip, spinning up a localised worksheet in seconds, whispering coaching tips while listening to classroom talk, or running adaptive practice on a cheap Android, all without touching the internet. And it’s not just hype. Edge AI is beginning to appear in LMIC classroom pilots (see our Field Note), signalling what might be possible. The big question is whether the builders and deployers of these tools can keep them light and fresh enough to stick. The tools also need to work in local languages and fit classroom realities, or they risk ending up as another cool demo that never scales.

“There’s a gravitational pull toward the edge… running computing where the data is created is usually the most efficient, effective and secure way to get things done.”

How Edge AI works

Edge AI means running models directly on phones, tablets, laptops, or school servers instead of the cloud, made possible by a new wave of small language models (SLMs).

The promise: faster responses, offline use in low- and no-connectivity schools, and stronger privacy since data stays on the device.

The catch: models that fit on a phone today can clog it tomorrow, and once they go stale they risk becoming classroom clutter instead of support.
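To put rough numbers on "fits on a phone", here is a back-of-the-envelope sketch. It uses the standard approximation that weight storage is about the parameter count times the bits per weight, divided by 8. The 3-billion-parameter model and the helper name `weight_footprint_gb` are illustrative assumptions, not figures from any specific product, and the estimate ignores runtime memory such as activations and caches.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in GB (10^9 bytes).

    Rough rule of thumb: parameters x bits per weight / 8 bits per byte.
    Real apps need extra room for the runtime, caches, and the OS.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 3-billion-parameter small language model (an assumption).
PARAMS = 3e9

for bits in (16, 8, 4):
    size = weight_footprint_gb(PARAMS, bits)
    print(f"{bits}-bit weights: ~{size:.1f} GB")
```

The same model shrinks by a factor of four when stored at 4 bits instead of 16, which is why compressed (quantized) small models are the ones that tend to show up on low-cost devices.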

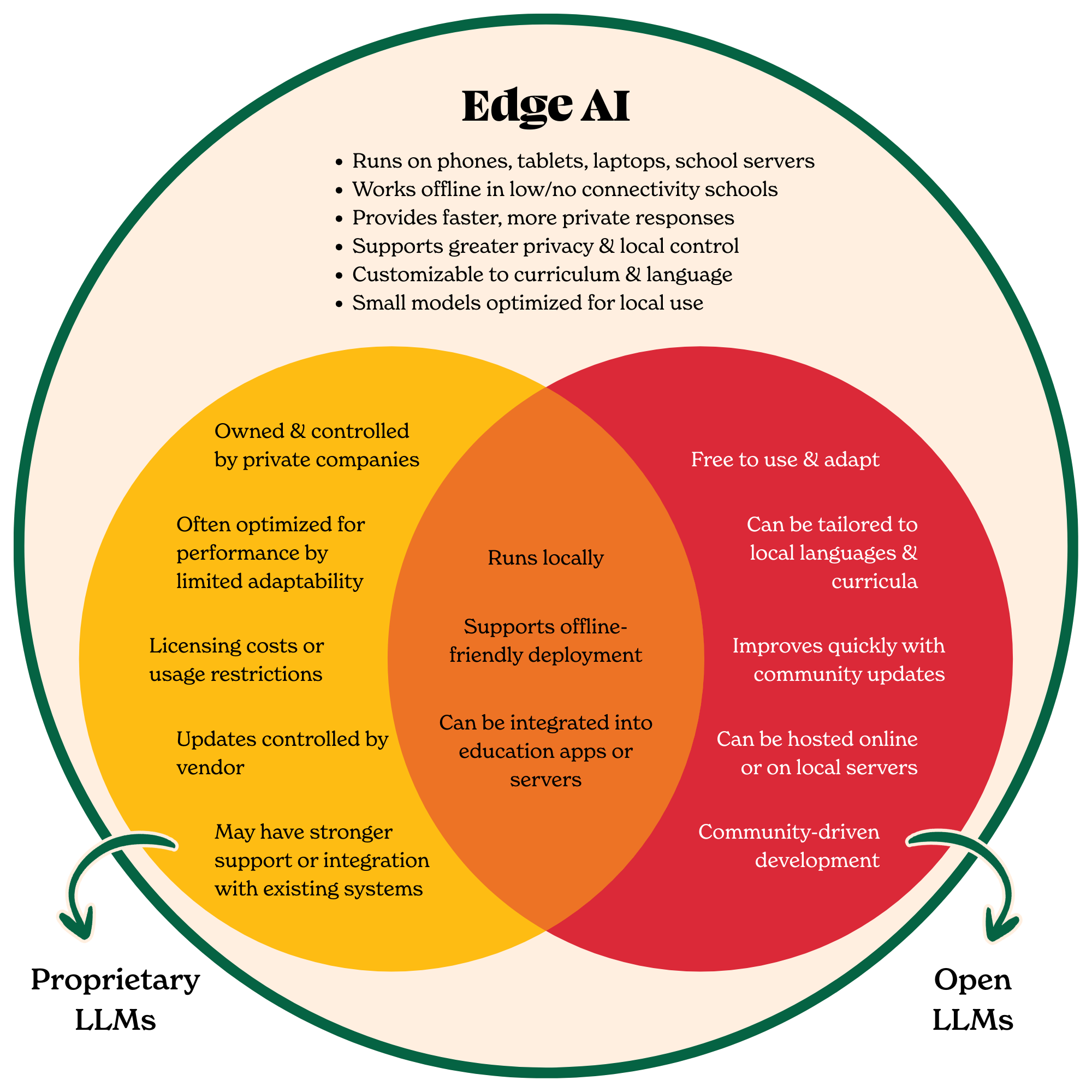

In an upcoming newsletter, we’ll take a deep dive into open-source (or “open-weight”) LLMs, which many governments in SSA are asking about. But for now, here’s a quick guide to which models Edge AI can use, and how that relates to open and closed LLMs:

What this means for education

Teaching at the Right Level (TaRL)

What the evidence says: TaRL has a strong track record in RCTs, but scaling it in global classrooms remains difficult. Leading sources flag recurring barriers:

The mechanics. Assessing large numbers of students, grouping by skill level, and transitioning children between groups without losing instructional time is hard.

The system design problem. Without a dedicated time slot and ongoing monitoring, teachers default back to grade-level instruction.

The classroom reality. Overcrowded classes and uneven student participation make assessment and regrouping cycles hard to sustain.

Together, these point to a central challenge. Regrouping is fragile because it depends on consistent teacher decisions inside difficult conditions, and systems do not always reinforce those choices.

What the opportunity is: Edge AI could help in a few ways:

Lightening the mechanics. Small, offline models on low-cost devices could give instant diagnostic scores, auto-group students by level, and refresh groups in minutes. This would cut down the assessment and regrouping burden that teachers face in large classes.

Understanding teacher decision-making. Edge AI could help surface patterns in how teachers adapt, sustain, or abandon TaRL routines by processing classroom data locally and generating insights for teachers and coaches. This is still experimental and raises questions about feasibility and privacy, but it points toward a behaviour-informed lens that could strengthen fidelity at scale.

Solving the entire equation. Edge AI could reconfigure the system itself, embedding diagnostics, regrouping, and adaptive practice into timetables, EMIS, and procurement so they become built-in routines, not fragile add-ons.
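To make the "lightening the mechanics" idea concrete, here is a minimal sketch of the regrouping step once each student already has a diagnostic level. Everything in it is an assumption for illustration: the level labels, the function names `group_by_level` and `moved_students`, and the idea that an upstream on-device assessment has produced one level per student. It is not how any particular tool does it.

```python
from collections import defaultdict

# Illustrative reading levels, lowest to highest (an assumption, not a standard
# taken from this newsletter).
LEVELS = ["beginner", "letter", "word", "paragraph", "story"]


def group_by_level(levels_by_student: dict[str, str]) -> dict[str, list[str]]:
    """Bucket students into groups by their latest diagnostic level."""
    groups: dict[str, list[str]] = defaultdict(list)
    for student, level in levels_by_student.items():
        if level not in LEVELS:
            raise ValueError(f"Unknown level for {student}: {level}")
        groups[level].append(student)
    # Return groups in level order, skipping levels with no students.
    return {lvl: sorted(groups[lvl]) for lvl in LEVELS if lvl in groups}


def moved_students(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List students whose level changed between two assessment rounds."""
    return sorted(s for s in new if s in old and old[s] != new[s])


# Hypothetical class data from two assessment rounds.
week_1 = {"Asha": "letter", "Ben": "letter", "Chi": "word"}
week_4 = {"Asha": "word", "Ben": "letter", "Chi": "word"}

print(group_by_level(week_4))
print("Changed groups:", moved_students(week_1, week_4))
```

The grouping itself is trivial to compute. The hard part, as the barriers above suggest, is getting reliable scores quickly and often enough that a refresh like this is worth running.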

Emerging signals:

Pratham’s PadhAI app uses offline ASR to check early-grade reading in local languages across India.

Tangerine is being used by tens of thousands of teachers around the world as an offline-first assessment platform, which includes auto-scoring and grouping features.

In Guatemala, Intel is piloting offline Edge AI on in-school devices to give students instant feedback, a model that could ease the assessment and regrouping burden at the heart of TaRL.

Why this works: The sticking point in TaRL is not whether teachers believe in the model, but whether they can realistically sustain the assessment and regrouping cycle week after week. Edge AI can trim down the grind of diagnostics and grouping, while also opening a path, although still tentative, to better understand the behavioural and systemic dynamics that shape teacher decisions.

Teacher coaching & support

What the evidence says: Coaching moves the needle on teaching quality, but it’s fragile because it typically hinges entirely on people. Skilled coaches are often in short supply, visits are expensive, and turnover is high. When support is cut back to hit better cost-effectiveness numbers, the trust and accountability that made coaching effective drop with it.

What the opportunity is: Edge AI can’t and shouldn’t replace the human relationship, but it can take pressure off by handling routine tasks. With small models running offline, coaches could:

Transcribe classroom audio to flag teacher talk time or student participation.

Auto-generate observation notes teachers can review the same day.

Access offline dashboards so scarce coaches focus on schools that need the most attention.
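The first task in the list above, flagging talk time, can be sketched in a few lines once the audio has been split into speaker-labelled segments. This is a minimal sketch under stated assumptions: the `Segment` structure, the "teacher" and "student" labels, and the function `talk_time_share` are hypothetical, and the on-device step that produces the segments (speech detection and speaker labelling) is assumed to exist upstream.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str   # hypothetical label, e.g. "teacher" or "student"
    start: float   # seconds from the start of the recording
    end: float     # seconds from the start of the recording


def talk_time_share(segments: list[Segment]) -> dict[str, float]:
    """Return each speaker's share of total talk time (values sum to 1.0)."""
    totals: dict[str, float] = {}
    for seg in segments:
        duration = max(0.0, seg.end - seg.start)  # ignore malformed segments
        totals[seg.speaker] = totals.get(seg.speaker, 0.0) + duration
    total = sum(totals.values())
    if total == 0:
        return {}
    return {speaker: t / total for speaker, t in totals.items()}


# Hypothetical one-minute excerpt of a lesson.
lesson = [
    Segment("teacher", 0.0, 30.0),
    Segment("student", 30.0, 40.0),
    Segment("teacher", 40.0, 60.0),
]

for speaker, share in talk_time_share(lesson).items():
    print(f"{speaker}: {share:.0%} of talk time")
```

A summary like this is only a prompt for conversation. What counts as a healthy balance of teacher and student talk is a judgment for the coach and teacher, not the tool.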

Emerging signals: New tools are starting to show how coaching could run on the edge.

RTI’s Loquat uses on-device machine learning to capture who is speaking in class so teachers get instant feedback on talk time and participation.

TeachFX gives teachers automated feedback on classroom dialogue by analyzing audio of their lessons. It has mostly been used in high-connectivity settings, but its approach could be adapted for edge-ready deployment, making instant coaching feedback possible even without the cloud.

Hodari Vision is testing smart glasses that analyse classroom interactions in real time, pointing to a future where observation data can be captured and interpreted directly in the room without relying on cloud services.

Why this works: Coaching bottlenecks are about scarcity and inconsistency. Edge AI frees coaches from clerical work and gives teachers actionable feedback between visits. Trust stays with humans, while edge tools stretch scarce capacity further.

Field note

Bookbot, in collaboration with the University of Dar es Salaam, is piloting a digital reading tutor tailored for Swahili-speaking students. The tool combines on-device speech recognition with locally contextualized content, and has shortened the training process for Kiswahili from years to just a few months. It’s an early but concrete example of localised Edge AI already landing in LMIC classrooms.

Playbook for action

For funders - Put your money where the model is. Back Edge AI pilots that have a credible path to scale. That means funding local language adaptation, teacher training, and update pathways.

For operators - Bake Edge AI into the school day. Think about how apps are downloaded and updated, how devices stay charged, and how teachers actually use them.

For governments - Flex your procurement muscle. Write Edge AI into tenders by requiring offline deployment, on-device functionality, and local language support. A few smart clauses can push vendors to design tools that stay resilient when the internet drops.

Hype-check / Big no-no’s

Mind the gap. Unlike cloud-based tools that can be shared over a single connection, Edge AI delivers its value on-device. If devices are unevenly distributed or poorly maintained, only some learners get the benefit while others are left behind.

It’s not fire-and-forget. Beware the “install once and done” myth. Without local repair, updates, charging, and teacher training, devices risk becoming paperweights, just like in the last three decades’ worth of EdTech interventions!

Trust is fragile. Teachers won’t buy into a black box. With Edge AI, building confidence means pairing systems with human-in-the-loop review and giving teachers simple controls so the tools feel like an assistant, not an authority.

Bundle up. Edge AI in schools isn’t plug-and-play. It basically means either bundling models into the hardware you distribute, or putting in the work to get an app that contains AI models onto the devices schools already have.

Over the Edge

Here’s the stretch vision we’re seeing.

Imagine if the power of today’s smartest models could run straight on a school tablet or a teacher’s phone. This is not pure sci-fi. Apple already ships small models on phones. Google does too, with Gemini Nano on Pixel phones, which developers can reach through the ML Kit GenAI APIs. Microsoft’s Phi-3 is built to run on-device, rivalling much larger models. Open-source projects have even squeezed Llama 3 onto a handset. These small language models won’t do everything the cloud models can, but for targeted tasks like regrouping learners or checking fluency, they can be sharp and cheap. And for schools in SSA and other LMICs, that means tasks that once required expensive cloud access could soon run entirely offline. The pace of progress is wild. What felt out of reach a year ago is now giving possible.

Contributors to this issue of the newsletter include Sara Cohen, Shabnam Aggarwal, and Dr. Robin Horn.